In a Symfony2 application we’re developing for a client, a key feature is the import of some XLSX files containing previous representation of the data (let’s call it legacy data) into the system.

When the team started to work on this feature the client provided us with test files to analyze the structure, the data that needed to be imported, etc. This files were rather small, only a few hounders of kilobytes (almost all of the files were under 500Kb). So one of the developers in our team build the feature to import the data using the amazing PHPExcel library. And we all were happy for some time.

Once the client got its hands in the system and throw it a couple of big files to test it, then we all realize that the time taking to process the file was increasingly going out of control, along with the memory used by PHP.

The start

When I was tasked of reducing the import time, I thought that first of all I needed to create a baseline to see what could be improved, and perhaps get a glance of the bottleneck.

This time we were provided with a test file of about 650 records (~5MB worth of data).

When I first tested this on my local laptop I got a very nice error:

Fatal error: Allowed memory size of 134217728 bytes exhausted (tried to allocate 112 bytes)

Since we’re processing a single file with only 650 records something should be wrong, its wasn’t logical that such a small file exhausted that much memory (128MB configured in the php.ini by default). We even tried upping the PHP memory to see how far it will climb, but even with 512MB of RAM, the import process didn’t completed a single time.

First round

So configuring PHP to use 1GB of RAM did the trick and the import process of this 5MB file completed successfully, and I could start to benchmark using my preferred profiling tool for PHP Blackfire.

So we setup our local development environment and started to measure what was going on. We expected that the parsing done by the PHPExcel library was going to take must of our time, but we couldn’t imagine to what extent!

When we initially did our test this was the initial situation (reported by the backfire curl command):

Wall Time 2min 47s CPU Time 2min 46s I/O Time 946ms Memory 1GB

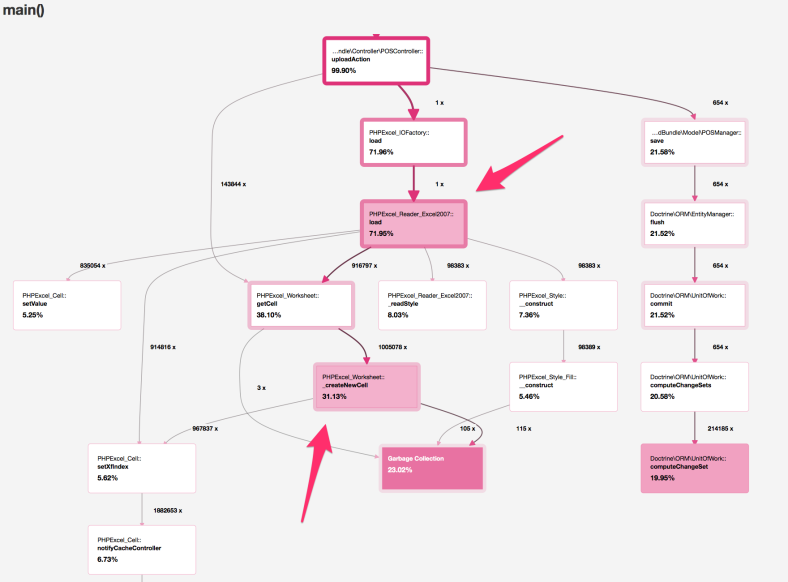

Two minutes to import our sample data was definitively a lot of time! So we took a look at the data provided by Blackfire, and for our surprise the load() method of the PHPExcel_Reader_Excel2007 class was consuming must of our time, and particularly the _createNewCell method along with the getCell method were causing a lot of garbage collection activity.

Perhaps we were using PHPExcel in the wrong way? Some digging on the PHPExcel sources code, some reading and some tweaking got us nowhere, the import time remained almost constant with small variations. The problem essentially is that PHPExcel builds an internal representation of the entire excel file, by default this cache es held in memory, causing the enormous amount of RAM that we spotted. We did a couple more of experiments and concluded that using PHPExcel for this import task may lead to troubles in the future, specially since we were expecting files with at least twice the data we were using for testing.

Once we opened our eyes and started to look around we found a couple of promising libraries spout and spreadsheet-parser. Both seemed really interesting and since all of our input files are XLSX files, we decided that spreadsheet-parser was a good fit for our use case (at least in theory), so we started testing.

Second round

In this occasion just by switching the library and using almost the example code provided in the README file we got a totally different situation:

Wall Time 33s CPU Time 32.2s I/O Time 842ms Memory 10.1MB

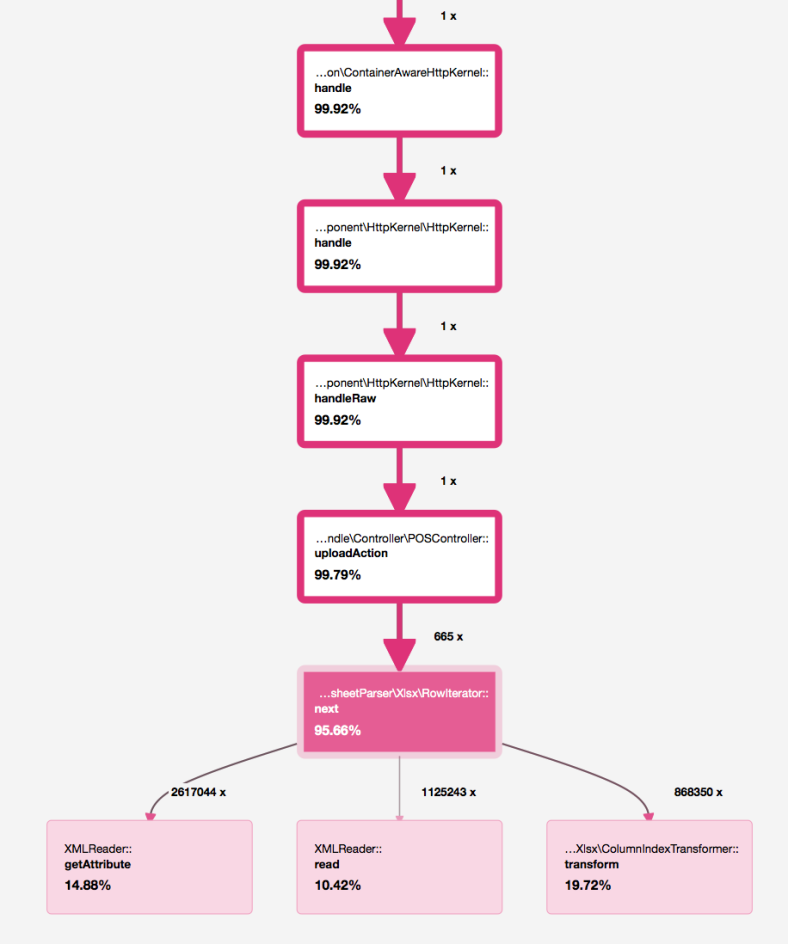

This values for time and memory usage were more inline with our expectations, just 33s to import the data and only 10MB of RAM used, this looked like a win! A reduction on about 5x the previous implementation time and also a reduction of 100x in the memory usage sure looked like a victory. But before called a day we decided to checkout what was going on in our import action.

A look at the Blackfire profile revealed a much more sane situation but something caught my eye, we were expending a lot of time on the computeChangeSet method of the UnitOfWork class from Doctrine. Usually I wouldn’t expect to see so much activity here specially since we’re importing new records into our database, meaning that we have no previous data in our entity objects to compare with.

Third round

A look at the code revealed that the UnitOfWork was being “flushed” at a per record rate! which was totally unnecessary (yep rookie mistake). At this point a git blame became handy, but just for teasing among developers 🙂 (just kidding, we actually don’t do that).

So we tweaked the code to avoid flushing each record into the database and did a final test:

Wall Time 13.3s CPU Time 13.2s I/O Time 124ms Memory 16.9MB

We reduced again the processing time to about half of the previous import time, down from 33s to around 13s. Another win! And taking a look at the profile on backfire:

Revealed that now our application spent the time iterating over the set of rows read from the XLSX file instead of computing changes in the doctrine unit of work.

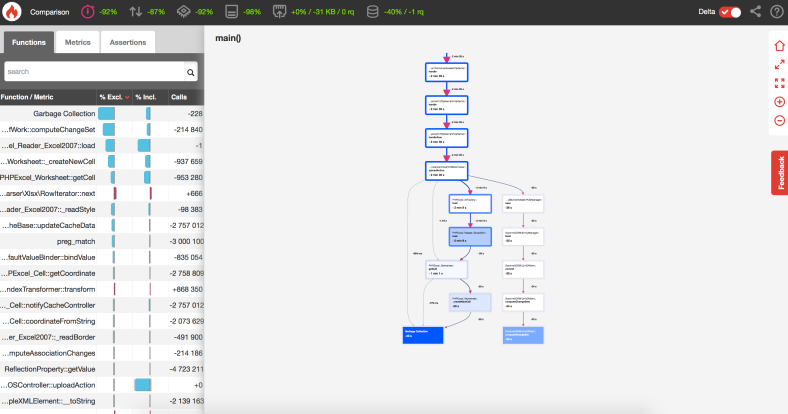

Finally let’s take a look at the comparison graph:

This great feature of Blackfire provides a quick comparison between two profiles, in this case we got improvements in every possible aspect. This feature is a live saver because with just a couple of clicks you get a nice graphical representation of how much your performance/metrics changed.

Conclusions

Just by changing our parsing library and fixing a couple of rookie mistakes in the code we were able to significantly reduce the time and resources spent in our data import process.

I want to highlight that I don’t think that PHPExcel is a bad library, on the contrary is a great great library. We still use PHPExcel in other projects to export data and to import excel (xls) files, plus PHPExcel supports more formats than any other library we’ve come around, but for our particular use case it wasn’t just performant enough.

There are some techniques that could be used to improve PHPExcel performance, several posts could be found on the web about this subject. And we tried some of this techniques, but couldn’t match the results that we got by using akeneo-labs/spreadsheet-parser.

Note: All the tests were done issuing a call to the upload action of our Symfony2 app directly from the command line:

blackfire curl 'http://sfdemo.dev/pos/upload' -H 'Cookie: PHPSESSID=silq9dgk9mbigb41e513746c36' -F "acme_bundle_upload_type[file]=@test-data.xlsx"

Blackfire is an amazing tool (I can’t stress this enough), sure, perhaps we could have a similar result by tweaking our code blindly but it would’ve taken longer. By using Blackfire we based every decision in the data provided by our application and it helped us to be certain that we were moving in the right direction. Perhaps other tools could be used, but Blackfire provided a wealth of performance data, including a lot of cute graphs for us to show here 🙂 seriously, the Blackfire graphs give a really great summary of information in an understandable form.